Detailing Tech-Facilitated Harms: Direct and Indirect

This article details tech-facilitated direct harms (online GBV) and indirect harms (algorithmic bias, data bias, data security, gender-blind tech) against women and girls. This is a follow up article to Checking Under the Dashboard.

Direct Harm/ Gender-Based Violence (GBV)/ Violence Against Women (VAW)

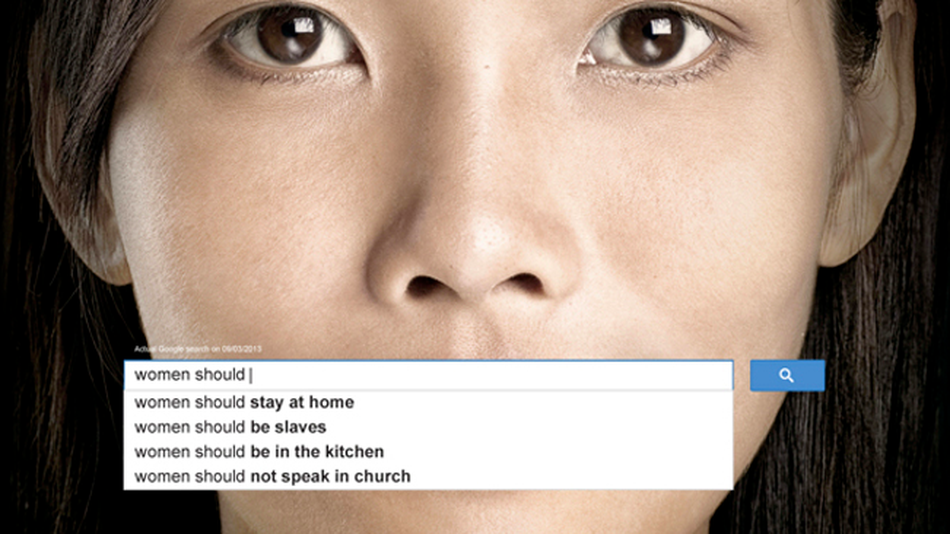

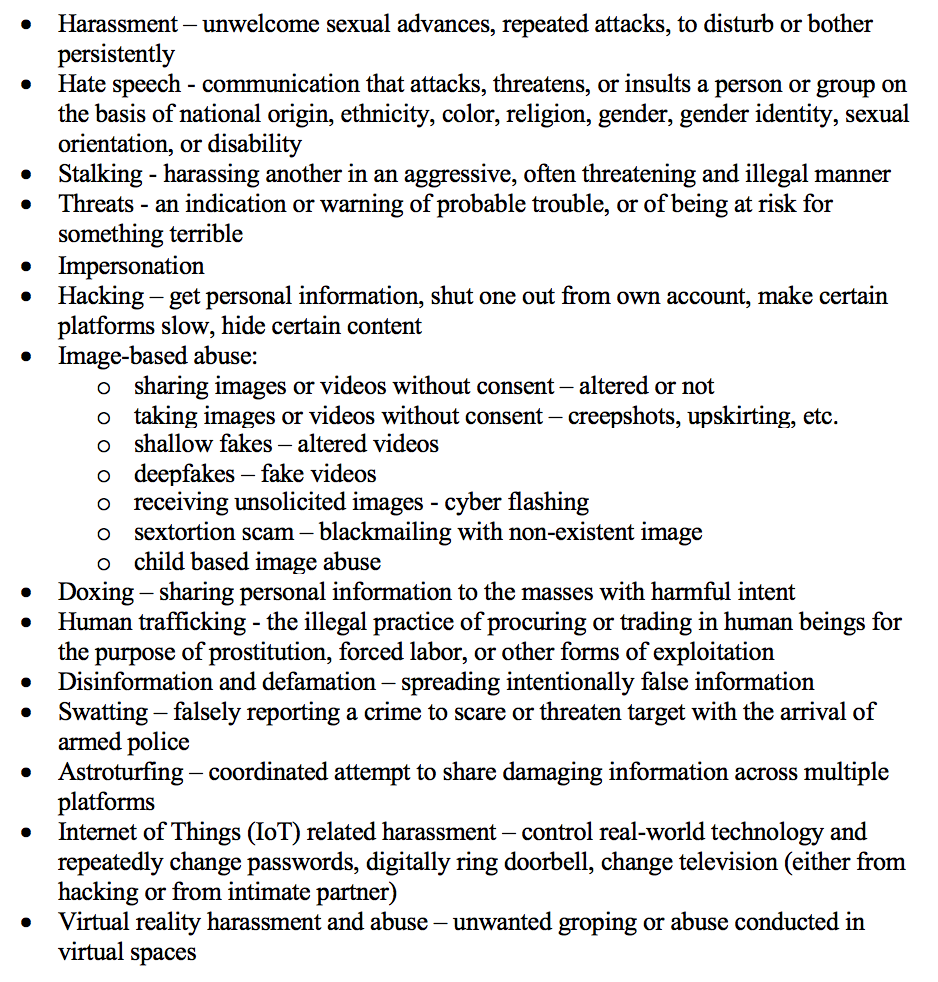

Direct harm against women and girls encompasses intentional violence such as: online harassment, hate speech, stalking, threats, impersonation, hacking, image-based abuse, doxing, human trafficking, disinformation and defamation, swatting, astroturfing, IoT-related harassment, and virtual reality harassment and abuse. These types of violence can be easily classified as GBV/ VAW, tech-facilitated GBV/VAW, or online GBV/ VAW because the intent behind the acts is clear, to harm an individual or group. There is a small, but growing body of work on these topics.

Groups that have been focused on this type of tech-facilitated direct harm for some time are the APC and journalists, although the work was historically not intended to focus on women, the targeting of women is common, thus a coalition of women journalists was recently formed against online violence. The child protection communities have also had a strong presence, as it relates specifically to children. Traditional GBV communities are also beginning to take up such topics since tech-facilitated GBV is caused by the same generational problem of power imbalance between men and women.

Included is a list of tech-facilitated direct harms that was compiled with sources coming from around the world including individual discussions, online events, and multiple conferences. This list is not meant to be exhaustive, merely to share information.

Indirect Harm/ GBV/ VAW

The way in which a majority of technology, especially digital tech, is being created today is amplifying gender inequalities and discriminating against women and girls. Despite preventing women from accessing jobs, funds, public services, and information, indirect harms against women from the misuse of data and tech is almost entirely overlooked.

Here we are talking about algorithmic bias (i.e. coded bias in artificial intelligence and machine learning), data bias (i.e. missing or mislabeled datasets), data security (i.e. sharing identifiable information), and other gender-blind tech that isn’t incorporating the voices of women and girls (e.g. bots, car crash dummies, and human resources software perpetuating harmful gender norms). Let’s dive a little deeper into these indirect harms by breaking down the different elements and terminology.

Algorithmic Bias –

Algorithms by themselves are not biased, but they are written by humans with unconscious bias. Every piece of digital technology is built with algorithms. An algorithm is basically a set of instructions that tells the computer what do. Algorithms are written by programmers or coders. To give a simplified example, if an algorithm is written to make a decision based on our world population and its instructions (a.k.a. code) say to look up “all data”, but neither the computer nor the programmer recognize that its “all” dataset is actually made up of 90% men and only 10% women, then clearly women are not being proportionally represented. This would not be a problem if our society was 90% male, but since our world population is roughly 50% male and 50% female, this algorithm is now erroneously amplifying male data to nearly the entire population, inadvertently making women proportionally very small and their concerns virtually invisible. If it’s not obvious, this is a huge problem for women.

Let’s think about what it would mean if this algorithm, or something like it, were used to hire new employees (e.g. Amazon), select people for loans (e.g. Apple Card), provide public services, or was even just meant to provide basic information to half of the population (e.g. Google search engine). It would mean that we are now hiring more men, providing more loans and public services to men, and all basic information is provided from the perspective of men. The example of 90% male and 10% female is an extreme and simplified example used to paint a clear picture of how an algorithm is only going to capture what it is told to look at and that it really matters how algorithms are written and to what datasets it has access.

Amazon and Apple Pay although, are real recent examples of algorithmic bias against women. Amazon built a machine learning tool that was only identifying male candidates before it was pulled. Apple Card is being investigated for giving higher credit limits to men than women based on their algorithm and they couldn’t explain why to customers. Research also shows that the language model currently used in Google’s search engine is also gender biased, perpetuating harmful gender stereotypes. These companies are looking and finding remedies for the problems mentioned above, but their existence begs for more scrutiny and a holistic approach to the problem.

Here we provide a few more definitions around algorithms. We have machine learning (ML) which are algorithms built to automate the analysis of data. ML algorithms are trained on specific datasets; or in other words, taught what to look for (by humans) with something like a training manual. ML is extremely common and is quite useful when you want to quickly group or categorize large amounts of data. ML can then be taken to another level when a ML algorithm is built to find its own training datasets; or in other words, write its own manual and teach itself. This type of ML is called “unsupervised learning”.

Even more advanced subsets of ML include deep learning (DL) and different neural networks (NN). Artificial intelligence (AI) is the vast and fluid term that currently encompasses all of what we have described (ML, DL, NN) and more, but will continue to change as computing gets more advanced. AI is defined as “the [process and mechanics] of making computers behave in ways that, until recently, we thought required human intelligence”. By this definition, examples of AI will only continue to expand, but currently is interchangeable with ML.

Some of the biggest problems in ML and AI today include the black box problem, concept drift, and overfitting. The black box problem can be a combination of two things, one, it could be about not having access to the original algorithm at all (e.g. intellectual property) or, two, it could also be about the algorithm getting so complex that even the creator is not able to explain the decision-making process anymore. In order for us to understand if there is algorithmic bias, whether or not it has the right proportion of male to female or if it’s original vision of the world population is skewed to favor men, we would need to review the original algorithm, original training dataset, as well as any changes it may have taken in the “learning” process. Knowing why an algorithm is making certain decisions can be difficult with unsupervised ML algorithms. Another concern to keep in mind, with ML when being used to predict future events or trends, is concept drift, when a variable (i.e. something around what you are trying to predict) shifts in an unforeseen way which causes the prediction to be less accurate over time. Finally, another concern, particularly in relation to gender bias, is overfitting. This is when an algorithm has been trained so much on a specific type of data (e.g. male) that it then has trouble identifying the slight differences in new data (e.g. female), thus producing output identical to the original data (e.g. male). It should be noted that overfitting is fixable, however it does require a lot of manual work for someone to program the specific differences into the algorithm. Work that may or may not be valued to outweigh costs.

Data Bias –

Data bias comes in many shapes and sizes, in analogue, and in digital. Data bias is nothing new, it’s just a larger concern when we’re talking about ML. One seemingly small bias becomes exponentially more significant and harder to detect the longer the algorithm or model runs. For example, let’s say we have a basic dataset to train our model for jobs. In this dataset all of the men are doctors, engineers, and construction workers and all of the women are nurses, secretaries, and teachers. This isn’t a problem in itself, since men can be doctors, engineers, and construction workers and women can be nurses, secretaries, and teachers, but since we’re talking about a computer and not a human, the computer will not be able to detect that women can be engineers unless we show it with data.

Now let’s say that this original model was used in a large firm to find new candidates for a job. An employee would search for engineering candidates and results would come up all men and one may think this is strange, but one could also think, “well, everyone knows that there aren’t a lot of women engineers, so I guess this makes sense that it’s all men… must be that pipeline problem” and move on to their next task. This of course is not because there are no women engineers, but because the algorithm was only able to identify men as engineers. This is a problem that would then reinforce gender stereotypes from the backend, meaning that it would be extremely difficult, if not impossible, to detect this problem as an average front end dashboard user.

This is one example based on Excavating AI to clearly paint the problem, but there are many different ways that data bias can affect outcomes. The most pressing issue in data bias is the overall absence of women and girls proper representation, particularly in training datasets. If they’re not properly represented in datasets, then it’s as if those concerns do not exist at all.

The three main categories of data bias in research are selection bias (planning), information bias (data collection), and confounding bias (analysis). Cognitive biases, in our case gender bias, can cut across all data biases. The result of gender bias in data are solutions or evidence that are either missing the entire picture with small scale impact, at best, or globally reinforcing gender stereotypes for generations, at worst. The world has been looking closer than ever at data bias because we are learning that the more our world goes digital, the more -isms will be exponentially encoded into our lives. Think about how quickly technology has evolved and about how it cuts across every single field of work. Data and tech are the linchpins to a sexist or equal future.

Data Security –

We’re going to look at data security from a GBV lens — this is largely about tech-solutions that have not taken the full risks associated with collecting and sharing one’s personal data into consideration. This applies to most gender equality projects since even the questioning of gender norms or empowerment of women (or questioning thereof) is often sensitive and political. When working with survivors of GBV, this is even more true.

The first understandable urge that new actors have when venturing into the field of gender equality or GBV is to find out prevalence data by location, but this could be dangerous. We would all like to know this information, but what is often overlooked is that gender equality and GBV data is complex and cannot be treated the same as other types of data. This is particularly the case for GBV incident data, but follows through with proxy indicators (which are quite similar, if not identical to gender equality indicators). Sharing the number of schools or students in any given region will likely not be problematic, but if the name and or location of GBV survivors or perceived women’s empowerment activists is shared with the wrong person or group, then the result can be backlash with increased violence or possibly even death.

When dealing with gender equality data, the entire process needs to come from a place of understanding that globally nearly one-in-three women have been subjected to physical or sexual violence in their lifetime and that most violence against women is perpetrated by an intimate partner. This means that just asking questions about either gender equality or GBV could potentially be putting one-in-three women at risk. Thus, all gender equality projects should be carefully examined if the benefits outweigh the risks.

Fully assessing the risk of gender equality and GBV projects data collection is crucial for women’s security.

As a couple additional notes when specifically handling GBV incident data, it’s also important to recognize that GBV data is massively underreported, thus anyone using the data must understand the limitations and possible algorithmic bias if intended to be used in ML tools. Additionally, collecting this type of information without proper GBV response services already in place is ethically questionable.

Gender-Blind Tech –

Gender-blind tech is any tech solution that has been created without analyzing how a product might affect men and women differently. This means looking not only at whether or not the product is more or less harmful for different genders but also whether or not it is more or less useful. The consequences of not taking gender into consideration can vary significantly. Best case, the tech will have the same negative and positive results for men and women, but worst case it could have only positive results for men and only negative results for women. This really goes for any tech product, digital or not. A lot of the examples given in this article are gender-blind tech that likely could have been avoided or minimized had we taken gender into consideration from the beginning.

Although we cannot objectively say that any tech is truly gender-blind unless the creator explicitly admits that they did not conduct a gender impact analysis, we can make assumptions based on the information that we do have.

Let’s take a look at Siri as an example. Siri is a female-voiced bot, or virtual assistant, intended to assist its millions of daily users with information. When a user says “Hey Siri, You’re a bi***” or other crude sexual commands the response is a flirtatious “I’d blush if I could”. This is not proof of gender-blind tech, but it is clearly an indicator of a bigger problem. When we look closer at the inner-workings of Siri, Alexa, and Google Home we see that they all use ML and operate with natural language processing (NLP), using large language models (LM) that often include gender stereotypical word embeddings (e.g. man is to computer programmer as woman is to homemaker). This means that, in addition to Siri’s flirtatious response to verbal sexual harassment, Siri is also programmed to respond with gender biased responses.

Although the companies who have created these bots are allegedly working on bias, I think it’s safe to say that there was no thorough gender impact assessment and Siri is most definitely in the gender-blind tech category.

Unless we deliberately analyze and include the voices of diverse women and girls, tech will continue to be created by and for “default men”

Written by Stephanie Mikkelson a Development Practitioner and Global Researcher focusing on responsible gender data and digital solutions for large INGOs and UN agencies.

This post was originally posted on her Medium blog.